For the life of me, I can’t remember why I set the HE GIF tunnel MTU to 1444 bytes. In any event, let’s see if the tunnel really is capable of sending a 1444-byte IPv6 packet.

ICMP ‘ping’ is probably the easiest way I can think of to check this. The data length chosen should be:

data length = MTU − ICMPv6 header length − IPv6 header length

In my case, that’s:

data length = 1444 − 8 − 40 = 1396
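The same arithmetic as a small Python sketch. The function and constant names are my own labels, not from any library, and it assumes a plain IPv6 header with no extension headers:

```python
IPV6_HEADER_LEN = 40    # fixed IPv6 header; no extension headers assumed
ICMPV6_HEADER_LEN = 8   # ICMPv6 echo request header


def ping6_payload_for_mtu(mtu: int) -> int:
    """Return the largest ping6 -s value that fits in one packet of size mtu."""
    payload = mtu - ICMPV6_HEADER_LEN - IPV6_HEADER_LEN
    if payload < 0:
        raise ValueError(f"MTU {mtu} is smaller than the headers alone")
    return payload


print(ping6_payload_for_mtu(1444))  # 1396
print(ping6_payload_for_mtu(1500))  # 1452
```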

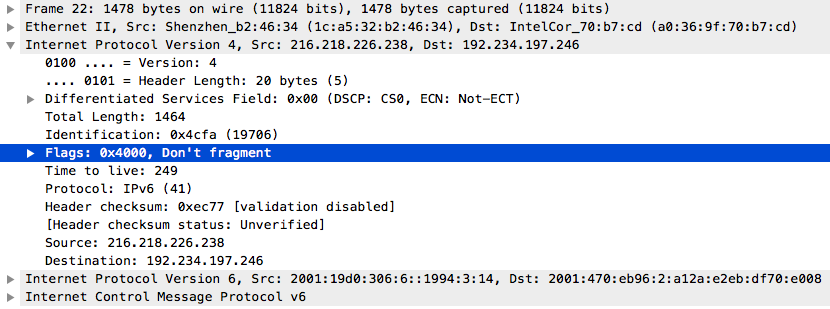

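Back to the Linux server to test this. The -M do part prohibits fragmentation, the equivalent of setting the DF (Don’t Fragment) bit in IPv4. (IPv6 has no DF bit; routers never fragment IPv6 packets, and -M do stops the sending host from fragmenting as well.)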

fission@nucleus[pts/11]:~% ping6 -M do -c 1 -s 1396 mirrors.pdx.kernel.org

PING mirrors.pdx.kernel.org(mirrors.pdx.kernel.org) 1396 data bytes

1404 bytes from mirrors.pdx.kernel.org: icmp_seq=1 ttl=57 time=25.3 ms

[...]

Success! If I try one more byte, it should fail:

fission@nucleus[pts/11]:~% ping6 -M do -c 1 -s 1397 mirrors.pdx.kernel.org

PING mirrors.pdx.kernel.org(mirrors.pdx.kernel.org) 1397 data bytes

From mirrors.pdx.kernel.org icmp_seq=1 Packet too big: mtu=1444

[...]

Perfect! It even tells me the MTU right there.
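If the MTU weren’t already known, the same pass/fail test could be repeated as a binary search. This is only a sketch: `probe` is a hypothetical callable that returns True when a ping of the given payload size gets a reply (for example, by running the ping6 command above), and the search assumes every size up to some limit passes and everything above it fails. A stand-in probe simulates the tunnel here so the example runs without a network:

```python
from typing import Callable


def largest_passing_payload(probe: Callable[[int], bool],
                            low: int = 0, high: int = 1452) -> int:
    """Binary-search the largest payload size for which probe(size) is True.

    Returns -1 if even the smallest size fails.
    """
    if not probe(low):
        return -1
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid        # mid fits; the answer is mid or larger
        else:
            high = mid - 1   # mid is too big; the answer is smaller
    return low


def fake_probe(size: int) -> bool:
    """Stand-in for a real ping: simulates a path MTU of 1444 (48 header bytes)."""
    return size + 48 <= 1444


print(largest_passing_payload(fake_probe))  # 1396
```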

This also works the other way around:

[ec2-user@ip-10-0-0-43 ~]$ ping6 -M do -c 1 -s 1396 nucleus.ldx.ca

PING nucleus.ldx.ca(nucleus.ldx.ca (2001:470:eb96:2::2)) 1396 data bytes

1404 bytes from nucleus.ldx.ca (2001:470:eb96:2::2): icmp_seq=1 ttl=35 time=189 ms

[...]

[ec2-user@ip-10-0-0-43 ~]$ ping6 -M do -c 1 -s 1397 nucleus.ldx.ca

PING nucleus.ldx.ca(nucleus.ldx.ca (2001:470:eb96:2::2)) 1397 data bytes

From tserv1.sea1.he.net (2001:470:0:9b::2) icmp_seq=1 Packet too big: mtu=1444

[...]

Notice that the ICMPv6 “packet too big” is coming from the tunnel endpoint.

Let’s see if anyone is monkeying around with the MSS value in IPv6 TCP connections. To do this, I spun up an IPv6-enabled host in AWS for the remote side, so I could capture packets on both ends.

The command I ran on the client (local) side:

fission@nucleus[pts/11]:~% curl -I 'http://[2a05:d018:859:9f00:fb11:b53b:d674:2439]'

HTTP/1.1 403 Forbidden

Date: Wed, 14 Nov 2018 07:56:42 GMT

Server: Apache/2.4.34 ()

[...]

The packet capture from the local (client) side:

23:56:42.524388 IP6 2001:470:eb96:2::2.35221 > 2a05:d018:859:9f00:fb11:b53b:d674:2439.80: Flags [S], seq 895111178, win 28800, options [mss 1440,sackOK,TS val 359807423 ecr 0,nop,wscale 7], length 0

23:56:42.707159 IP6 2a05:d018:859:9f00:fb11:b53b:d674:2439.80 > 2001:470:eb96:2::2.35221: Flags [S.], seq 1704057741, ack 895111179, win 26787, options [mss 1440,sackOK,TS val 3793742308 ecr 359807423,nop,wscale 7], length 0

And from the remote (AWS) side:

07:56:42.641083 IP6 2001:470:eb96:2::2.35221 > 2a05:d018:859:9f00:fb11:b53b:d674:2439.80: Flags [S], seq 895111178, win 28800, options [mss 1440,sackOK,TS val 359807423 ecr 0,nop,wscale 7], length 0

07:56:42.641112 IP6 2a05:d018:859:9f00:fb11:b53b:d674:2439.80 > 2001:470:eb96:2::2.35221: Flags [S.], seq 1704057741, ack 895111179, win 26787, options [mss 8941,sackOK,TS val 3793742308 ecr 359807423,nop,wscale 7], length 0

Holy jumbo frames, Batman! So, yes, there is some MSS clamping going on somewhere, but it’s clamped only to the ‘standard’ value of 1440 (1500 MTU − 40-byte IPv6 header − 20-byte TCP header). This is obviously not low enough to go through my IPv6 tunnel with an MTU of 1444.
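For reference, the MSS arithmetic can be run in both directions. This is a minimal sketch with names of my own choosing; it assumes a 40-byte IPv6 header with no extension headers and a 20-byte base TCP header. It shows that an MSS matching the tunnel would be 1384, and that an MSS of 8941 corresponds to an interface MTU of 9001 on the AWS side:

```python
IPV6_HEADER_LEN = 40  # fixed IPv6 header; no extension headers assumed
TCP_HEADER_LEN = 20   # base TCP header, without options


def mss_for_mtu(mtu: int) -> int:
    """MSS a host would advertise for a given interface MTU."""
    return mtu - IPV6_HEADER_LEN - TCP_HEADER_LEN


def mtu_for_mss(mss: int) -> int:
    """Interface MTU implied by an advertised MSS."""
    return mss + IPV6_HEADER_LEN + TCP_HEADER_LEN


print(mss_for_mtu(1500))  # 1440, the value seen after clamping
print(mss_for_mtu(1444))  # 1384, what would actually fit the tunnel
print(mtu_for_mss(8941))  # 9001, implied by the AWS host's SYN-ACK
```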

Given my configuration of an IPv6 MTU of 1444, if I cause a remote server to send more than about 1.4 KB, it will need to do PMTUD, since the MSS of 1440 isn’t ‘correct’ for the tunnel. Fetching the default Apache index page should do the trick:

fission@nucleus[pts/11]:~% curl -o /dev/null 'http://[2a05:d018:859:9f00:fb11:b53b:d674:2439]'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3630 100 3630 0 0 6717 0 --:--:-- --:--:-- --:--:-- 6709

The local (client) packet capture (IP addresses replaced for readability):

00:13:20.383768 IP6 client.37783 > awshost.80: Flags [S], seq 3916962842, win 28800, options [mss 1440,sackOK,TS val 360056888 ecr 0,nop,wscale 7], length 0

00:13:20.569823 IP6 awshost.80 > client.37783: Flags [S.], seq 3533963009, ack 3916962843, win 26787, options [mss 1440,sackOK,TS val 3794740167 ecr 360056888,nop,wscale 7], length 0

00:13:20.569881 IP6 client.37783 > awshost.80: Flags [.], ack 1, win 225, options [nop,nop,TS val 360056935 ecr 3794740167], length 0

00:13:20.569960 IP6 client.37783 > awshost.80: Flags [P.], seq 1:105, ack 1, win 225, options [nop,nop,TS val 360056935 ecr 3794740167], length 104: HTTP: GET / HTTP/1.1

00:13:20.755458 IP6 awshost.80 > client.37783: Flags [.], ack 105, win 210, options [nop,nop,TS val 3794740353 ecr 360056935], length 0

00:13:20.761947 IP6 awshost.80 > client.37783: Flags [P.], seq 2857:3915, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1058: HTTP

00:13:20.761957 IP6 client.37783 > awshost.80: Flags [.], ack 1, win 242, options [nop,nop,TS val 360056983 ecr 3794740353,nop,nop,sack 1 {2857:3915}], length 0

00:13:20.923726 IP6 awshost.80 > client.37783: Flags [P.], seq 2745:3915, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1170: HTTP

00:13:20.923739 IP6 client.37783 > awshost.80: Flags [.], ack 1, win 264, options [nop,nop,TS val 360057023 ecr 3794740353,nop,nop,sack 2 {2857:3915}{2745:3915}], length 0

00:13:20.923746 IP6 awshost.80 > client.37783: Flags [.], seq 1:1373, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1372: HTTP: HTTP/1.1 403 Forbidden

00:13:20.923755 IP6 client.37783 > awshost.80: Flags [.], ack 1373, win 287, options [nop,nop,TS val 360057023 ecr 3794740354,nop,nop,sack 1 {2745:3915}], length 0

00:13:20.923889 IP6 awshost.80 > client.37783: Flags [.], seq 1373:2745, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1372: HTTP

00:13:20.923894 IP6 client.37783 > awshost.80: Flags [.], ack 3915, win 309, options [nop,nop,TS val 360057023 ecr 3794740354], length 0

00:13:20.924126 IP6 client.37783 > awshost.80: Flags [F.], seq 105, ack 3915, win 309, options [nop,nop,TS val 360057023 ecr 3794740354], length 0

00:13:21.109162 IP6 awshost.80 > client.37783: Flags [F.], seq 3915, ack 106, win 210, options [nop,nop,TS val 3794740707 ecr 360057023], length 0

00:13:21.109185 IP6 client.37783 > awshost.80: Flags [.], ack 3916, win 309, options [nop,nop,TS val 360057069 ecr 3794740707], length 0

The remote (server) packet capture (IP addresses replaced for readability):

08:13:20.504327 IP6 client.37783 > awshost.http: Flags [S], seq 3916962842, win 28800, options [mss 1440,sackOK,TS val 360056888 ecr 0,nop,wscale 7], length 0

08:13:20.504364 IP6 awshost.http > client.37783: Flags [S.], seq 3533963009, ack 3916962843, win 26787, options [mss 8941,sackOK,TS val 3794740167 ecr 360056888,nop,wscale 7], length 0

08:13:20.690332 IP6 client.37783 > awshost.http: Flags [.], ack 1, win 225, options [nop,nop,TS val 360056935 ecr 3794740167], length 0

08:13:20.690342 IP6 client.37783 > awshost.http: Flags [P.], seq 1:105, ack 1, win 225, options [nop,nop,TS val 360056935 ecr 3794740167], length 104: HTTP: GET / HTTP/1.1

08:13:20.690362 IP6 awshost.http > client.37783: Flags [.], ack 105, win 210, options [nop,nop,TS val 3794740353 ecr 360056935], length 0

08:13:20.690654 IP6 awshost.http > client.37783: Flags [.], seq 1:1429, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1428: HTTP: HTTP/1.1 403 Forbidden

08:13:20.690662 IP6 awshost.http > client.37783: Flags [.], seq 1429:2857, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1428: HTTP

08:13:20.690667 IP6 awshost.http > client.37783: Flags [P.], seq 2857:3915, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1058: HTTP

08:13:20.857632 IP6 2001:470:0:9b::2 > awshost: ICMP6, packet too big, mtu 1444, length 1240

08:13:20.857662 IP6 awshost.http > client.37783: Flags [.], seq 1:1373, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1372: HTTP: HTTP/1.1 403 Forbidden

08:13:20.857664 IP6 awshost.http > client.37783: Flags [.], seq 1373:2745, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1372: HTTP

08:13:20.857669 IP6 awshost.http > client.37783: Flags [P.], seq 2745:3915, ack 105, win 210, options [nop,nop,TS val 3794740354 ecr 360056935], length 1170: HTTP

08:13:20.860396 IP6 2001:470:0:9b::2 > awshost: ICMP6, packet too big, mtu 1444, length 1240

08:13:20.882134 IP6 client.37783 > awshost.http: Flags [.], ack 1, win 242, options [nop,nop,TS val 360056983 ecr 3794740353,nop,nop,sack 1 {2857:3915}], length 0

08:13:21.044163 IP6 client.37783 > awshost.http: Flags [.], ack 1, win 264, options [nop,nop,TS val 360057023 ecr 3794740353,nop,nop,sack 2 {2857:3915}{2745:3915}], length 0

08:13:21.044175 IP6 client.37783 > awshost.http: Flags [.], ack 1373, win 287, options [nop,nop,TS val 360057023 ecr 3794740354,nop,nop,sack 1 {2745:3915}], length 0

08:13:21.044179 IP6 client.37783 > awshost.http: Flags [.], ack 3915, win 309, options [nop,nop,TS val 360057023 ecr 3794740354], length 0

08:13:21.044182 IP6 client.37783 > awshost.http: Flags [F.], seq 105, ack 3915, win 309, options [nop,nop,TS val 360057023 ecr 3794740354], length 0

08:13:21.044239 IP6 awshost.http > client.37783: Flags [F.], seq 3915, ack 106, win 210, options [nop,nop,TS val 3794740707 ecr 360057023], length 0

08:13:21.229463 IP6 client.37783 > awshost.http: Flags [.], ack 3916, win 309, options [nop,nop,TS val 360057069 ecr 3794740707], length 0

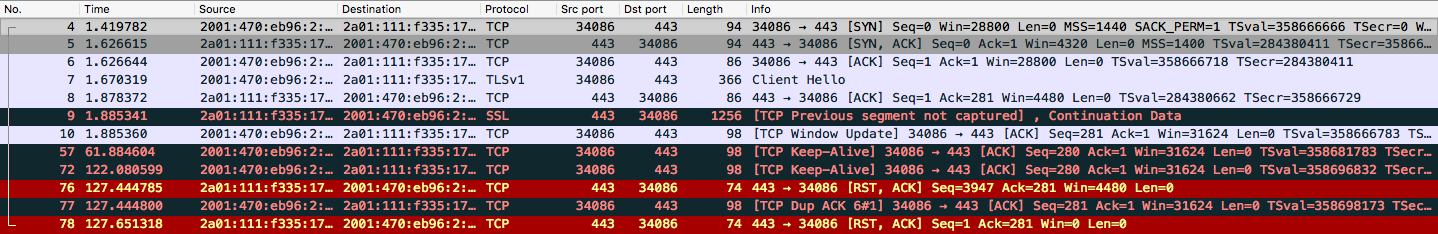

Here one can see PMTUD in action:

- The web server sent three packets, two of length 1428, and one of 1058; only the packet of length 1058 made it through.

- In response to the oversized packets, 2001:470:0:9b::2 (tserv1.sea1.he.net, the tunnel endpoint) interjected with ICMPv6 “packet too big” messages, telling the web server that the MTU on the path to the client is 1444. The client never actually saw this, or knew it was happening.

- The web server then sent three new packets, two of length 1372 and one of 1170, now fitting into the client’s MTU.
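The segment sizes in both rounds follow from the MTU the server believed in at the time. A rough sketch, with names of my own invention: it assumes every data segment carries the 12-byte timestamp option (nop,nop,TS) seen in the captures, and that the 3914-byte response is simply cut into full-size segments plus a remainder. The first round uses 1500, the MTU implied by the client’s advertised MSS of 1440; the second uses the 1444 from the ICMPv6 message.

```python
from typing import List

IPV6_HEADER_LEN = 40           # fixed IPv6 header; no extension headers assumed
TCP_HEADER_LEN = 20            # base TCP header
TCP_TIMESTAMP_OPTION_LEN = 12  # nop,nop,TS option, as seen in the captures


def segment_sizes(total_bytes: int, mtu: int) -> List[int]:
    """Split total_bytes of TCP payload into segments that fit the given MTU."""
    per_segment = (mtu - IPV6_HEADER_LEN - TCP_HEADER_LEN
                   - TCP_TIMESTAMP_OPTION_LEN)
    if per_segment <= 0:
        raise ValueError(f"MTU {mtu} leaves no room for payload")
    full, rest = divmod(total_bytes, per_segment)
    return [per_segment] * full + ([rest] if rest else [])


print(segment_sizes(3914, 1500))  # [1428, 1428, 1058]
print(segment_sizes(3914, 1444))  # [1372, 1372, 1170]
```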

This works in reverse, too; I won’t show the full capture here again, but the important bit is:

01:00:36.856242 IP6 2001:470:eb96:2::1 > 2001:470:eb96:2::2: ICMP6, packet too big, mtu 1444, length 1240

Here my local router (the local end of the tunnel) is advising my local web server to send less data. (Come to think of it, this would happen for practically every IPv6 connection where any significant amount of data is sent…)

One note if you’re running tests like these: the MTU value for a remote system can be cached. So it’s worth checking the cached value with ip route get to followed by the remote address, and letting the cache entry expire before re-running a test:

[ec2-user@ip-10-0-0-43 ~]$ ip route get to 2001:470:eb96:2::2

2001:470:eb96:2::2 from :: via fe80::f3:28ff:fe6c:f9ac dev eth0 src 2a05:d018:859:9f00:fb11:b53b:d674:2439 metric 0

cache expires 165sec mtu 1444 hoplimit 64 pref medium
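When re-running tests from a script, the cached MTU and its remaining lifetime can be pulled out of that output. A small sketch, assuming the output looks like the sample above; the function name and the returned keys are hypothetical:

```python
import re
from typing import Dict, Optional


def parse_route_cache(output: str) -> Dict[str, Optional[int]]:
    """Extract the cached MTU and expiry (in seconds) from ip route get output.

    Either value is None when the corresponding field is absent.
    """
    mtu = re.search(r"\bmtu (\d+)", output)
    expires = re.search(r"\bexpires (\d+)sec", output)
    return {
        "mtu": int(mtu.group(1)) if mtu else None,
        "expires_sec": int(expires.group(1)) if expires else None,
    }


# Sample taken from the output shown above.
sample = (
    "2001:470:eb96:2::2 from :: via fe80::f3:28ff:fe6c:f9ac dev eth0 "
    "src 2a05:d018:859:9f00:fb11:b53b:d674:2439 metric 0\n"
    "    cache expires 165sec mtu 1444 hoplimit 64 pref medium\n"
)

print(parse_route_cache(sample))  # {'mtu': 1444, 'expires_sec': 165}
```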